Introduction

As artificial intelligence (AI) models become increasingly capable of producing lifelike content, there has been understandable hesitancy regarding “deepfakes” and their potential negative impacts. Of note, not all synthetic media are deepfakes. Synthetic media refers to any form of media (pictures, video, audio, text, etc.) that is created at least in part by AI or machine learning tools. In most contexts, the term “deepfake” carries a malicious association, usually referring to a video in which someone has digitally altered an individual’s face and/or body in order to spread false information.

While it’s readily apparent that actors can use synthetic media to perpetuate a wide array of harms, synthetic content in itself is just a tool, like the internet, social media, and other technological inventions that have come before. Therefore, synthetic content is neither inherently positive nor negative. Its impact depends on the actor using the tool and their intention.

While there are cases where synthetic content is used for nefarious purposes (see, for instance, Combatting AI-Generated CSAM), this paper will focus on positive use cases of synthetic content.

Methodology

This paper draws on two main sources to understand synthetic content and is part of a larger research portfolio used to create the game The Deepfake Files. First, we interviewed seventeen experts in the field. They included cybersecurity experts, computer science researchers, nonprofit leaders, and government employees, and were primarily based in the United States. Interviews were confidential, semi-structured, and lasted approximately thirty minutes each.

In doing this research, we found that consistent themes emerged, capturing ways in which the effects of deepfakes could be mitigated through both technical and non-technical means. Many of these common themes are reflected in the game The Deepfake Files.

This paper also relies on a second source of data, namely secondary analysis of peer-reviewed journals, popular press, and similarly vetted resources.

Communication

Synthetic content can enhance communication. An interviewee shared that a colleague who is a lawyer records videos explaining updates on clients’ case proceedings and uses AI to translate those videos into each client’s primary language. This exemplifies how, in urgent situations like court proceedings, medical emergencies, or natural disasters, synthetic AI translation can be used to share information rapidly. In Louisiana, this strategy has also been implemented at 911 call centers. Previously, the call center relied on a language translation company whose interpreter would join the call to translate non-English speech. In emergency situations, every moment is essential. Using AI to create a synthetic translation while preserving the original audio has made translation “up to 70% faster.” Synthetic translation is being used for emergency response in other cities as well. In San Jose, one of the nation’s most linguistically diverse cities, the implementation of synthetic translation improved translation accuracy from 60% to 98%.

In the professional context, synthetic content can also be a productive tool. With many professional meetings and events being attended remotely, the sense of connection that can make a speaker compelling is often lost. NVIDIA released Maxine, a suite of tools to address this issue. One of the tools included in Maxine synthetically adjusts the video of the person speaking to improve their eye contact with their virtual audience. While there is debate about whether features like this should be used, a preliminary study from Indiana University found that some users who self-identified as neurodivergent felt the eye contact correction feature allowed them to “confidently communicate with neurotypical individuals,” and others supported a tool that made their colleagues or relatives more comfortable.

Researchers have also found that AI models can be used to generate videos of people communicating an intended message through sign language. There are a limited number of sign language experts, and creating a system that can correctly interpret audio and translate it into sign language with the full emphasis, timing, and emotion is no easy task. However, there is a growing dataset of video recordings of people using sign language on which these systems can be trained. Early studies of models trained on this dataset, which convert audio into videos of people signing, suggest that the synthetic visuals were realistic enough that algorithms could not tell them apart from videos of real people signing. Using this dataset in the future to, for instance, generate interpreters during a live event will allow more people from Deaf and hard of hearing communities to join in and experience media that were previously only auditory.

For people who will lose their voice due to disease, companies like Acapela Group can synthetically create a personalized voice. Their product, called “my own voice,” is trained on recordings from the individual, or a closely related loved one, to ensure that once their voice is lost, their communication will still carry a melody similar to how they spoke before. Technology like this is already making an impact on personal and public life. In July 2024, former Congresswoman Jennifer Wexton (VA) used this technology to speak with President Joe Biden in the Oval Office when he signed the National Plan to End Parkinson’s Act, and later that month she gave the first speech with an AI-generated voice on the House floor. The technology granted new life to her voice after she quickly lost her own following her diagnosis of progressive supranuclear palsy in 2023. Luckily, as a public figure, Congresswoman Wexton had enough recordings of past speeches to train the AI voice generator. She jokes that it is more formal than how she normally speaks at home and that she wouldn’t use it to ask her husband to “pass [her] the ketchup.”

Medicine

Synthetic content is also emerging as a promising tool in the medical field with potential applications in therapy. People with Alzheimer’s disease may struggle to identify their loved ones if they don’t appear as the person with Alzheimer’s remembers them. Generating synthetic content from visuals of a loved one as they appeared in an era the person with Alzheimer’s remembers can facilitate comfortable communication. This is significant because people with Alzheimer’s can often feel stressed or overwhelmed when they perceive themselves as surrounded by strangers.

Synthetic content can also lend a helping hand in areas of medicine outside of therapy. AI systems built to identify medical issues, for instance tumors in magnetic resonance imaging (MRI) scans, need tremendous amounts of data to train on. Generative adversarial networks (GANs), themselves trained on real MRI images, can create images that are sufficient for training systems built to identify tumors. In a comparison between an identification AI system trained on real MRI scans and another trained on 90% GAN-generated images and 10% real images, the two systems performed at the same level of identification.

Synthetic content can also play a productive role in fundraising for medical needs, enhancing messaging to achieve greater reach and impact. In a 2019 “Malaria must die” campaign that used both “visual and voice-altering” synthetic content, David Beckham and synthetically edited versions of him spoke in nine languages (English, Spanish, Kinyarwanda, Arabic, French, Hindi, Mandarin, Kiswahili, and Yoruba) and encouraged viewers to add their voice to the petition. The full petition, with voices from around the world, was delivered to the UN General Assembly, which has historically provided a majority of malaria funding. This campaign was so successful, reaching roughly half a billion people, that using synthetic content (and working with David Beckham) has been a continued strategy of Malaria No More, UK.

Privacy

At the nexus of medical practice and privacy concerns, synthetic content can be a valuable tool. Medical data is a hot commodity, as being able to draw connections between diagnoses and treatments is key to unlocking more successful healthcare. But sharing patient records for the sake of larger research can violate a patient’s privacy. Traditional ways of hiding a patient’s identity, such as blurring, covering, or pixelating faces and recognizable features past recognition, can obscure valuable diagnostic information. However, processes that include generating synthetic content can mask the identity of individuals while a system is trained to preserve facial expressions and the relevant medical information. This protects patient privacy without obscuring key body movements or other physical data essential for diagnostics, unlocking a wealth of data that could lead to helpful findings.

Similar methods of obscuring identity with synthetically generated visuals can also protect those escaping persecution while still adding their story to the narrative. The movie Welcome to Chechnya (2020) tells the stories of individuals being persecuted by an anti-LGBTQ campaign in Russia. With the help of partially synthetic content, specifically the faces of volunteers overlaid on those of interviewees, the people interviewed in Welcome to Chechnya were able to share their experiences and spread awareness without sacrificing their privacy or facing greater risk of persecution.

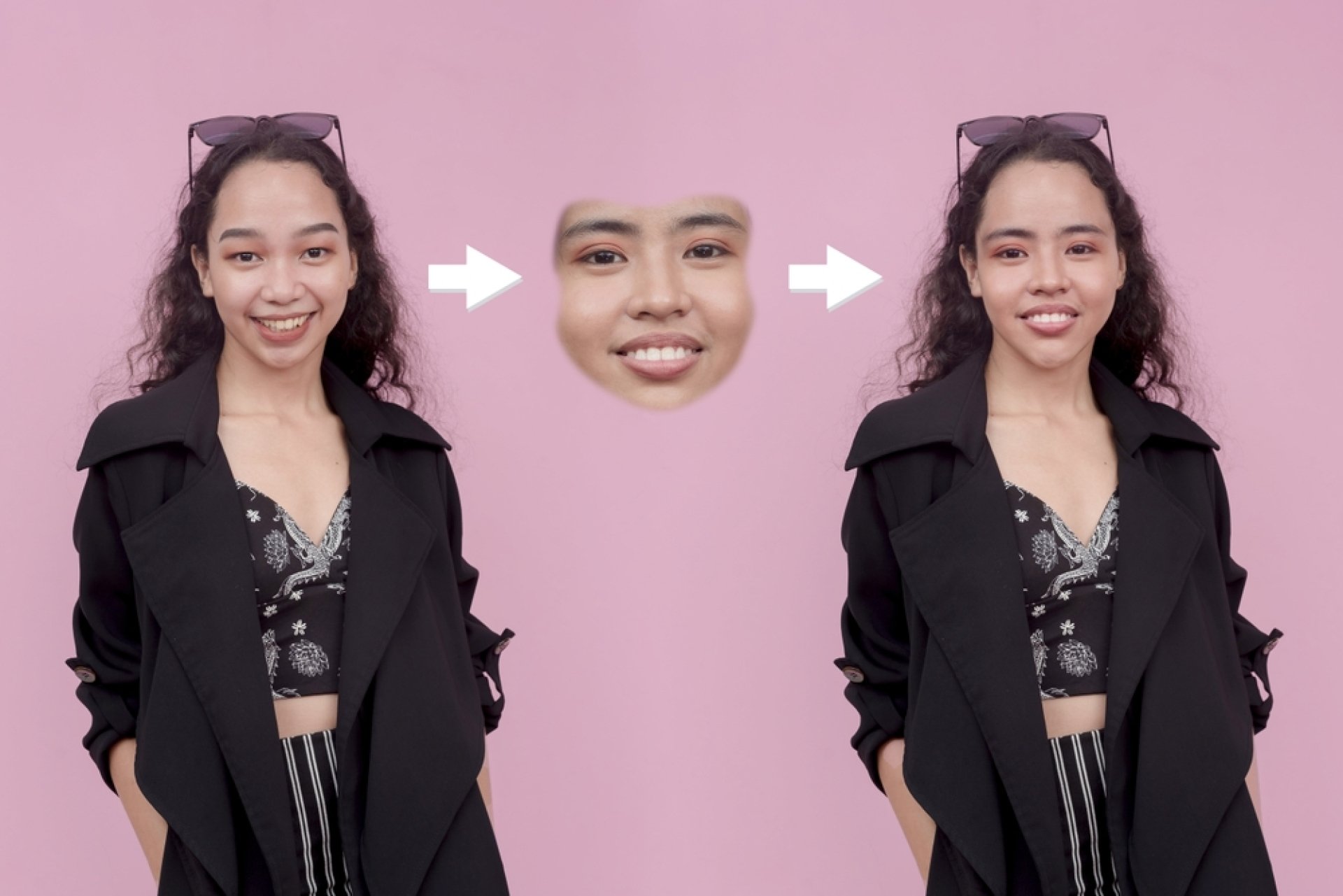

Protecting individuals' identity in daily activities can also be aided by synthetic content. For instance, facial recognition is often identified as one of the most invasive practices. But what if facial recognition could be paired with synthetic content to provide greater privacy?

Researchers at Intel Labs and Binghamton University explored the concept of a “privacy-enhancing anonymization system” that allows users to control which photos their face is recognizable in. In photos where an individual does not consent to the shared audience, the system would use synthetic content to create a new version of the photo in which the individual’s facial features are changed. Imagine that a friend posts a photo that you are in on their social media. You are connected to some members of their network but not others. If your anonymization setting allowed only your connections to see pictures of you, the friends you have in common would see the original photo, while people you are not connected with would see a version of the picture with a synthetically generated face over the original. The methods used are “novel face synthesis” in addition to GANs for identity swapping. This combination ensures that while the facial features are changed, the individual’s expression remains the same. Applied to photo sharing on social media, the version of a picture that one sees may vary depending on who posts it and the face-sharing authorizations of each person in the photo. With more work in this area, anonymization systems like this can be used to counter cyber violence against women and marginalized groups and to protect the privacy of children.
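To make the viewer-dependent behavior concrete, the scenario above can be sketched in a few lines of Python. This is only an illustration of the consent logic, not the researchers’ system: the `Person` class, the `connections_only` setting, and the `faces_to_anonymize` function are hypothetical names introduced here, and the face synthesis step itself is left out entirely. The sketch assumes each person has a single setting that limits their real face to their own connections.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Person:
    """A hypothetical user with a set of connections and one consent setting."""
    name: str
    connections: set[str] = field(default_factory=set)
    # Assumed setting: if True, only this person's connections see their real face.
    connections_only: bool = True


def faces_to_anonymize(people_in_photo: list[Person], viewer: str) -> list[str]:
    """Return the names of people whose faces would be replaced for this viewer."""
    hidden = []
    for person in people_in_photo:
        is_self = viewer == person.name
        is_connection = viewer in person.connections
        if person.connections_only and not (is_self or is_connection):
            hidden.append(person.name)
    return hidden


# Example: a friend posts a photo that you appear in.
you = Person("you", connections={"ana", "ben"})
friend = Person("friend", connections={"ana", "ben", "cy"}, connections_only=False)
photo = [you, friend]

print(faces_to_anonymize(photo, "ana"))  # a mutual connection sees the original
print(faces_to_anonymize(photo, "cy"))   # not connected to you: your face is swapped
```

In a real system, the list returned for each viewer would determine which faces are passed to the synthesis model before the photo is displayed, so that different viewers receive different versions of the same picture.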